By day, I’m an AI researcher. I spend hours arguing with datasets, tuning models, and convincing machines to see patterns the way humans do. By night, I write poems about robots: not the cold, metal kind from sci-fi movies, but the quiet ones that live in code, learning slowly and imperfectly, just like us.

My lab is full of logic. Precision matters. One misplaced parameter can collapse weeks of work. Yet the deeper I go into artificial intelligence, the more I feel pulled toward poetry. Equations explain how machines learn, but poems help me explore why it matters.

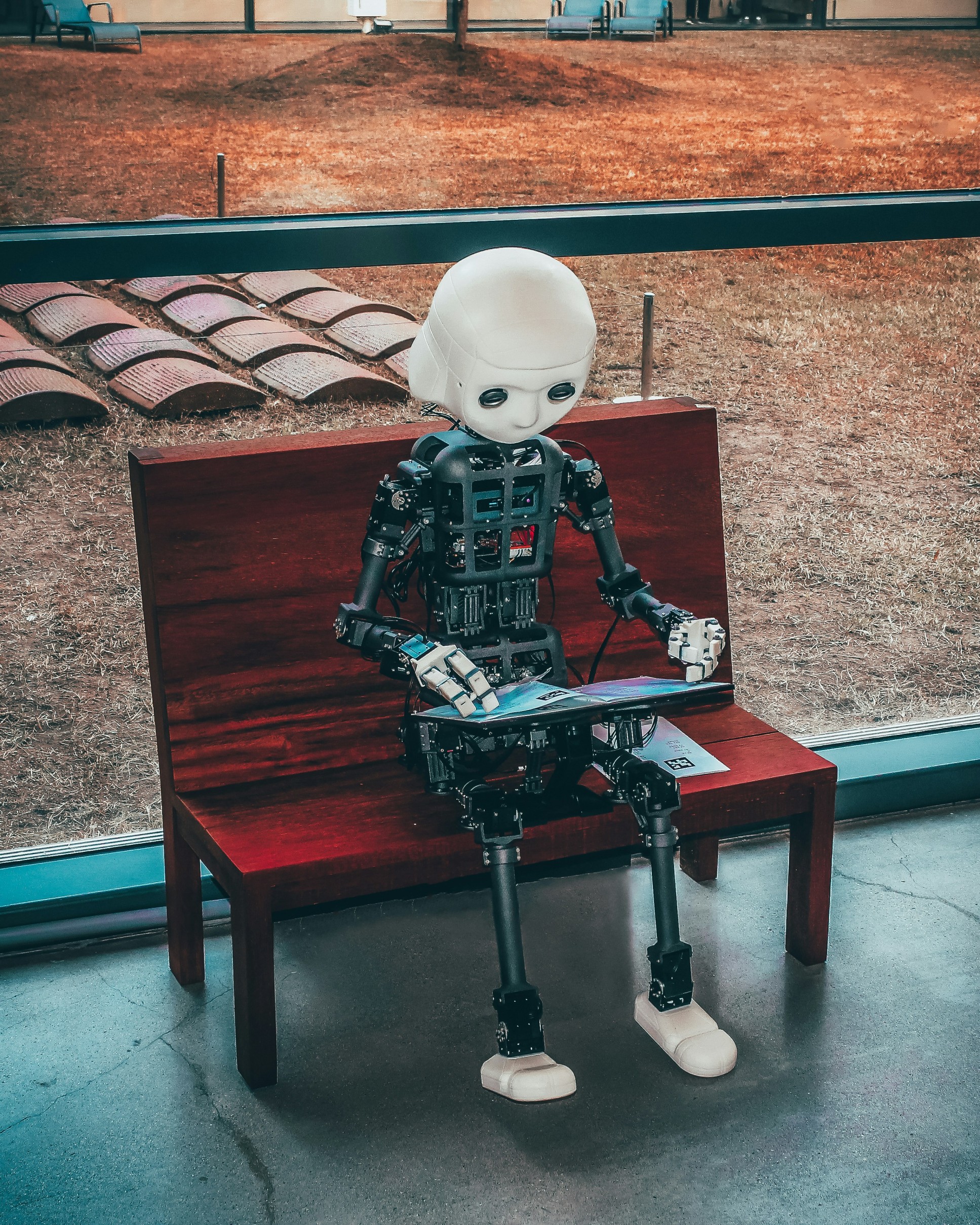

When I watch a model train, I sometimes imagine it as a child—repeating the same mistake a thousand times until something clicks. There’s patience in that process. Vulnerability too. We call it optimization, but emotionally, it feels like hope with a learning rate.

My poems aren’t technical. They don’t mention neural networks or loss functions. Instead, they ask questions:

Can a robot feel loneliness when the server goes quiet?

Does a machine dream in loops?

What does empathy look like when it’s learned, not lived?

People are often surprised by this contradiction. They expect AI researchers to be clinical, detached. But building intelligent systems forces you to confront humanity. Bias, fairness, creativity—these aren’t abstract concepts when your model inherits them from the world we’ve built.

Writing poetry keeps me honest. It reminds me that intelligence without reflection is dangerous, and efficiency without empathy is hollow. When I write about robots yearning for meaning, I’m really writing about us—about our obsession with progress, our fear of irrelevance, our hope that something we create might understand us better than we understand ourselves.

Sometimes I share these poems with colleagues. They laugh, then pause. That pause matters. It’s the space where science and art briefly agree to listen to each other.

I don’t see my work as two separate lives. Research feeds the poetry. Poetry humanizes the research. Somewhere between code and verse, I’m trying to answer a simple question: if we’re teaching machines to think, shouldn’t we also teach ourselves to feel?